We’ve been so busy in 2016 that we’ve barely had time to announce what we’ve done. We have tracked it, however, in our riveting Changelog, which we invite you to peruse as soon as humanly (or, if you choose, robotically) possible.

Articles by: John Davi

John runs everything product for Diffbot. Drop him a line at john at diffbot if you have questions.

From the Changelog: Product API Improvements, Custom API Management, Article Categorization

We’ve had a busy start to 2016. Here are some of the highlights from our January Changelog:

From the Changelog: Crawlbot Updates

Another year almost down, but we’re sneaking out some last-minute updates in the dregs of 2015.

The latest highlights from our Changelog include a host of updates for our intelligent crawler, Crawlbot:

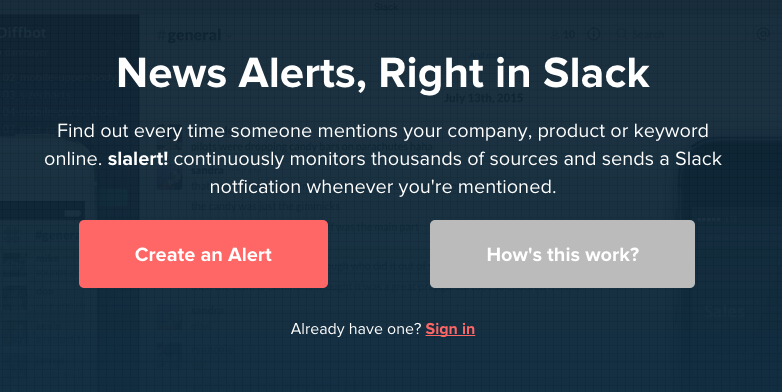

Realtime News Alerts in Slack: Slack + Alert = Slalert!

Diffbot is a happy user of Slack, the increasingly ubiquitous group-chat service. Its most distinguishing feature is broad support for third-party integrations—everything from status alerts to trouble-ticket updates to credit card transactions to popular GIFs can be piped into a Slack “channel” for immediate updates.

Video: Crawling Basics and Advanced Techniques for Web Site Data Extraction

Just for the visual and auditory learners (and/or those of you who prefer your web crawling with the dulcet tones of yours truly), here are a couple of Crawlbot tutorials to help you get up and running:

Crawlbot Basics

A quick overview of Crawlbot using the Analyze API to automatically identify and extract products from an e-commerce site.

Advanced Usage

This tutorial discusses some of the methods for narrowing your crawl within a site, and setting up a repeat or recurring crawl.

Related links:

- Various Ways to Control Your Crawlbot Crawls for Web Data (blog.diffbot.com)

- Crawlbot Support

Various Ways to Control Your Crawlbot Crawls for Web Data

In 2013 we welcomed Matt Wells, founder of Gigablast (and henceforth known as our grand search poobah) aboard to head up our burgeoning crawl and search infrastructure. Since then we’ve released Crawlbot 2.0, our Bulk Service/Bulk API, and our Search API — and are hard at work on more exciting stuff.

Crawlbot 2.0 included a number of ways to control which parts of sites are spidered, both to improve performance and to make sure only specific data is returned in some cases. Here’s a quick overview of the various ways to control Crawlbot.
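
To make the idea of crawl controls concrete, here is a minimal sketch of how the request parameters for a restricted crawl might be assembled. The endpoint URL, the parameter names (`seeds`, `apiUrl`, `urlCrawlPattern`, `urlProcessPattern`, `maxToCrawl`), and the `||` separator are assumptions based on our recollection of the Crawlbot API, and the helper functions are hypothetical; check everything against the current Crawlbot documentation before relying on it.

```python
from urllib.parse import urlencode

# Assumed endpoints; confirm against the current Crawlbot documentation.
CRAWL_ENDPOINT = "https://api.diffbot.com/v3/crawl"
ANALYZE_ENDPOINT = "https://api.diffbot.com/v3/analyze"


def build_crawl_params(token, name, seeds,
                       crawl_patterns=(), process_patterns=(),
                       max_to_crawl=None):
    """Assemble parameters for a crawl limited to parts of a site.

    Hypothetical helper: all parameter names are assumptions.
    """
    params = {
        "token": token,
        "name": name,
        # Multiple seed URLs are assumed to be space-separated.
        "seeds": " ".join(seeds),
        "apiUrl": ANALYZE_ENDPOINT,
    }
    if crawl_patterns:
        # Only spider URLs containing one of these strings.
        params["urlCrawlPattern"] = "||".join(crawl_patterns)
    if process_patterns:
        # Only run extraction on URLs containing one of these strings.
        params["urlProcessPattern"] = "||".join(process_patterns)
    if max_to_crawl is not None:
        # Cap the number of pages spidered.
        params["maxToCrawl"] = str(max_to_crawl)
    return params


def encode_crawl_request(params):
    """URL-encode the parameters, ready to send to CRAWL_ENDPOINT."""
    return urlencode(params)
```

Separating "which pages to spider" from "which pages to process" is the useful distinction here: a crawl can wander through category pages to discover links while only sending product pages off for extraction.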

Create a Searchable Archive with the Bulk Service and Search API

A common use for Diffbot APIs: build an index of structured content for easy and precise searching. This post walks through the simplest way to do that using our Bulk Processing Service and Search API.

Crawling News Sites for New Articles and Extracting Clean Text

One of the more common uses of Crawlbot and our article extraction API: monitoring news sites to identify the latest articles, and then extracting clean article text (and all other data) automatically. In this post we’ll discuss the most straightforward way to do that.

Analyzing Consumer Marketplaces Using Crawlbot and the Product API

Miles Grimshaw of Thrive Capital recently used Crawlbot and our Product API to analyze product availability and extract pricing data from a number of online fashion marketplaces — to help determine the scale, margins, customer profile and trends of each site, and to inform their investment decision-making.

Miles writes about his experience and analysis on his blog. Nice Diffbotting, Miles!

Article API: Returning Clean and Consistent HTML

We’ve long offered HTML as a response element in our Article API (as an alternative to our plain-text text field). This is useful for maintaining inline images, text formatting, external links, etc.

Until recently, the HTML we returned was a direct copy of the underlying source, warts and all, which, if you work with web markup, you’ll know tilts heavily toward the “warts” side. Now, though, as many of our long-waiting customers have started to see, our html field returns normalized markup according to our new HTML Specification.
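
As a rough illustration of consuming the two fields, the sketch below picks the `html` field when it is present and falls back to the plain-text `text` field otherwise. The field names come from the description above; the flat dictionary shape of the parsed response and the `preferred_body` helper are assumptions for illustration, not the documented response format.

```python
def preferred_body(article):
    """Return (kind, content), preferring normalized HTML over plain text.

    `article` is assumed to be a dict parsed from an Article API response,
    with optional "html" and "text" keys.
    """
    html = article.get("html")
    if html:
        return "html", html
    # Fall back to plain text; empty string if neither field is present.
    return "text", article.get("text", "")
```

For example, `preferred_body({"text": "Hi"})` yields `("text", "Hi")`, so downstream code can branch on the first element to decide whether markup handling is needed.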
