Some websites already know that you want their data and want to help you out.

Twitter, for example, figures you might want to track some social metrics, like tweets, mentions, and hashtags. They help you out by providing developers with an API, or application programming interface.

There are more than 16,000 APIs out there, and they can be helpful in gathering useful data from sites to use for your own applications.

But not every site has them.

Worse, even the ones that do don’t always keep them supported enough to be truly useful. Some APIs are certainly better developed than others.

So even though they’re designed to make your life simpler, they don’t always work as a data-gathering solution. So how do you get around the issue?

Here are a few things to know about using APIs and what to do if they’re unavailable.

APIs: What Are They and How Do They Work?

APIs are sets of requirements that govern how one application can talk to another. They make it possible to move information between programs.

For example, travel websites aggregate information about hotels all around the world. If you were to search for hotels in a specific location, the travel site would interact with each hotel site’s API, which would then return the available rooms that meet your criteria.

On the web, APIs make it possible for sites to let other apps and developers use their data for their own applications and purposes.

They work by “exposing” some limited internal functions and features so that applications can share data without developers having direct access to the behind-the-scenes code.
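To make the idea of "exposing" limited functions concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the hotel records, the `cost_basis` field, and the `search_rooms` function are invented for illustration and do not describe any real site's API. It only shows the general pattern of a public function returning a filtered, whitelisted view of internal data.

```python
import json

# Hypothetical internal data store; a real site would keep this private.
_INTERNAL_ROOMS = [
    {"hotel": "Harbor Inn", "city": "Lisbon", "price": 120, "cost_basis": 70},
    {"hotel": "Old Town Suites", "city": "Lisbon", "price": 95, "cost_basis": 60},
    {"hotel": "Lakeview", "city": "Zurich", "price": 210, "cost_basis": 150},
]

# Only these fields are ever shown to outside callers (an assumption for this sketch).
PUBLIC_FIELDS = ("hotel", "city", "price")


def search_rooms(city: str, max_price: int) -> str:
    """Public entry point: return matching rooms as JSON with public fields only."""
    matches = [
        {field: room[field] for field in PUBLIC_FIELDS}
        for room in _INTERNAL_ROOMS
        if room["city"] == city and room["price"] <= max_price
    ]
    return json.dumps(matches)
```

A caller such as a travel site can ask for rooms in Lisbon under a given price and gets back hotel, city, and price, but never the internal `cost_basis` field or the code that produced the result.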

Bigger sites like Google and Facebook know that you want to interact with their data, so they use APIs to make that easier without having to reveal their secrets.

Not every site is able (or willing) to invest the developer time in creating APIs. Smaller ecommerce sites, for example, may skip creating APIs for their own sites, especially if they also sell through Amazon (which already has its own API).

Challenges to Building APIs

Some sites just may not be able to develop their own APIs, or may not have the capacity to support or maintain them. Some other challenges that might prevent sites from developing their own APIs include:

- Security – APIs may provide sensitive data that shouldn’t be accessible by everyone. Protecting that data requires upkeep and development know-how.

- Support – APIs are just like any other program and require maintenance and upkeep over time. Some sites may not have the manpower to support an API consistently over time.

- Mixed users – Some sites develop APIs for internal use, others for external. A mixed user base may need more robust APIs or several, which may cost time and money to develop.

- Integration – Startups or companies with a predominantly external user base for the API may have trouble integrating with their own legacy systems. This requires good architectural planning, which may not be possible for some.

Larger sites like Google and Facebook spend plenty of resources developing and supporting their APIs, but even the best-supported APIs don’t work 100% of the time.

Why APIs Aren’t Always Helpful for Data

If you need data from websites that don’t change their structure a lot (like Amazon) or that have the capacity to support their APIs, then you should use those APIs.

But don’t rely on APIs for everything.

Just because an API is available doesn’t mean it always will be. Twitter, for example, limited third-party applications’ use of its APIs.

Companies have also shut down services and APIs in the past, whether because they go out of business, want to limit the data other companies can use, or simply fail to maintain their APIs.

Google regularly shuts down APIs that it finds to be unprofitable. Two examples are the late Google Health API and Google Reader API.

While APIs can be a great way to gather data quickly, they’re just not reliable.

The biggest issue is that sites have complete control over their APIs. They can decide what information to give, what data to withhold, and whether or not they want to share their API externally.

This can leave plenty of people in the lurch when it comes to gathering necessary data to run their applications or inform their business.

So how do you get around using APIs if there are reliability concerns or a site doesn’t have one? You can use web scraping.
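One way to picture this is a fallback: try the API first and switch to scraping when the API cannot serve the request. The sketch below is an illustration under stated assumptions, not a prescribed design. `ApiUnavailable`, `get_data`, and the two fetch callables are hypothetical names, and the callables stand in for whatever API client and scraper you actually use.

```python
class ApiUnavailable(Exception):
    """Raised by the (hypothetical) API client when the API cannot serve a request."""


def get_data(api_fetch, scrape_fetch):
    """Try the API first; fall back to scraping if the API is unavailable.

    Both arguments are zero-argument callables supplied by the caller.
    Returns a (data, source) tuple so callers can see which path was used.
    """
    try:
        return api_fetch(), "api"
    except ApiUnavailable:
        return scrape_fetch(), "scrape"
```

Returning the source alongside the data makes it easy to log how often the fallback is being used.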

Web Scraping vs. APIs

Web scraping is a more reliable alternative to APIs for several reasons.

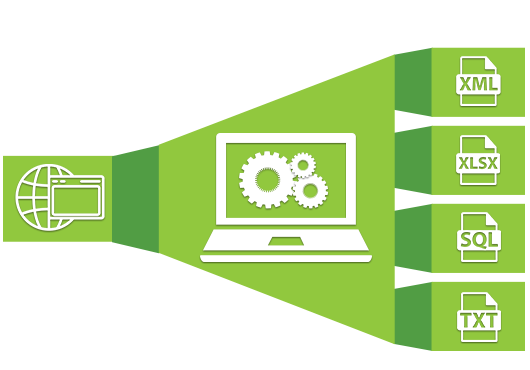

Web scraping is always available. Unlike APIs, which may be shut down, changed or otherwise left unsupported, web scraping can be done at any time on almost any site. You can get the data you need, when you need it, without relying on third-party support.

Web scraping gives you more accurate data. APIs can sometimes be slow in updating, as they’re not always at the top of the priority list for sites. APIs can often provide out-of-date, stale information, which won’t help you.

Web scraping has no rate limits. Many APIs have their own rate limits, which dictate the number of queries you can submit to the site in a given period. Web scraping, in general, doesn’t have formal rate limits, and you can access data as often as you need. As long as you’re not hammering sites with requests, you should always have what you need.
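Avoiding "hammering" a site usually comes down to spacing out your own requests. Here is a minimal sketch of one way to do that in Python: a small throttle that enforces a minimum delay between calls. The `Throttle` class and its parameters are hypothetical names for this example, and the right interval for any given site is an assumption you would need to choose yourself.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock               # injectable for testing
        self._sleep = sleep               # injectable for testing
        self._last = None                 # time of the previous request

    def wait(self):
        """Sleep if the previous call was too recent; return the seconds slept."""
        delay = 0.0
        if self._last is not None:
            elapsed = self._clock() - self._last
            delay = max(0.0, self.min_interval - elapsed)
            if delay > 0:
                self._sleep(delay)
        self._last = self._clock()
        return delay
```

In a scraper you would call `throttle.wait()` immediately before each page request, so that requests to the same site are never closer together than `min_interval` seconds.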

Web scraping will give you better structured data. While APIs should theoretically give you structured data, sometimes APIs are poorly developed. If you need to clean the data received from your API, it can be time-consuming. You may also need to make multiple queries to get the data you actually want and need. Web scraping, on the other hand, can be customized to give you the cleanest, most accurate data possible.
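As a rough illustration of customizing a scraper to return clean, structured records, the sketch below uses Python's standard-library `html.parser` to pull a name and price out of each product on a page. The markup, the class names (`product`, `name`, `price`), and the `parse_products` helper are all assumptions made for this example; a real page would need its own selectors.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect the text of 'name' and 'price' elements inside each 'product' element."""

    def __init__(self):
        super().__init__()
        self.products = []   # one dict per product element seen
        self._field = None   # field whose text we expect next

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})
        elif "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_data(self, data):
        text = data.strip()
        if self._field and text and self.products:
            self.products[-1][self._field] = text
            self._field = None


def parse_products(html):
    """Return a list of {'name': str, 'price': float} records from the HTML."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return [
        {"name": p["name"], "price": float(p["price"].lstrip("$"))}
        for p in parser.products
        if "name" in p and "price" in p  # skip incomplete records
    ]


# Hypothetical page fragment used only to demonstrate the parser.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span> <span class="price">$24.99</span></li>
  <li class="product"><span class="name">Notebook</span> <span class="price">$3.50</span></li>
</ul>
"""
```

Calling `parse_products(SAMPLE_HTML)` yields one dictionary per product with the price already converted to a number, so the cleaning step happens at extraction time rather than afterward.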

When it comes to reliability, accuracy, and structure, web scraping beats out the use of APIs most of the time, especially if you need more data than the API provides.

The Knowledge Graph vs. Web Scraping

When you don’t know where public web data of value is located, Knowledge-as-a-Service platforms like Diffbot’s Knowledge Graph can be a better option than scraping.

The Knowledge Graph is better for exploratory analysis. This is because the Knowledge Graph is constructed by crawls of many more sites than any one individual could be aware of. The range of fact-rich queries that can be constructed to explore organizations, people, articles, and products provides a better high-level view than the results of scraping any individual page.

Knowledge Graph entities can combine a wider range of fields than web extraction. This is because most facts attached to Knowledge Graph entities are sourced from multiple domains. The broader the range of crawled sites, the better the chance that new facts may surface about a given entity. Additionally, the ontology of our Knowledge Graph entities changes over time as new fact types surface.

The Knowledge Graph standardizes facts across all languages. Diffbot is one of the few entities in the world to crawl the entire web. Unlike traditional search engines where you’re siloed into results from a given language, Knowledge Graph entities are globally sourced. Most fact types are also standardized into English, which allows exploration of a wider firmographic and news index than any other provider.

The Knowledge Graph is a more complete solution. Unlike web scraping where you need to find target domains, schedule crawling, and process results, the Knowledge Graph is like a pre-scraped version of the entire web. Structured data from across the web means that knowledge can be built directly into your workflows without having to worry about sourcing and cleaning of data.

With this said, if you know precisely where your information of interest is located, and need it consistently updated (daily, or multiple times a day), scraping may be the best route.

Final Thoughts

While APIs can certainly be helpful for developers when they’re properly maintained and supported, not every site will have the ability to do so.

This can make it challenging to get the data you need, when you need it, in the format you need.

To overcome this, you can use web scraping to get the data you need when sites either have poorly developed APIs or no API at all.

Additionally, for pursuits that require structured data from many domains, technologies built on web scraping like the Knowledge Graph can be a great choice.
