Reviews are a veritable gold mine of data. They’re one of the few places where customers, unprompted, lay out the best and the worst parts of using a product or service. And the relative richness of natural language can point product or service providers in a nuanced direction more definitively than quantitative metrics like time on site, bounce rate, or sales numbers.

The flip side of this linguistic richness is that reviews are largely unstructured data. Beyond that, many reviews are written somewhat informally, making the task of decoding their meaning at scale even harder.

Restaurant reviews are known for being among the richest of all reviews. They tend to document the entire experience: social interactions, location, décor, service, price, and food.

This poses an extra challenge for the programmatic appraisal of reviews. Some reviews focus entirely on service, while others reference only food. Restaurant reviews often tell a story: they may be about an anniversary or a birthday. Of all product types, food is the one with which we may have the widest range of experience. And restaurants are appraised against a matrix of other food establishments, special trips, family gatherings, and personal cooking skill (e.g. “how my mother used to make this dish”).

Given all of these angles that could provide valuable feedback for restaurateurs (or anyone in a field that attracts many reviews), we wanted to see how our knowledge organization tools could help create useful signals out of this unstructured data.

The Process

To begin with, we needed all of the raw text of a given restaurant’s reviews. We chose Virtue, a Michelin-reviewed (Bib Gourmand) southern American restaurant located in Chicago’s Hyde Park neighborhood.

We first used our Extraction APIs to grab all of the text from each review of the restaurant on Yelp.

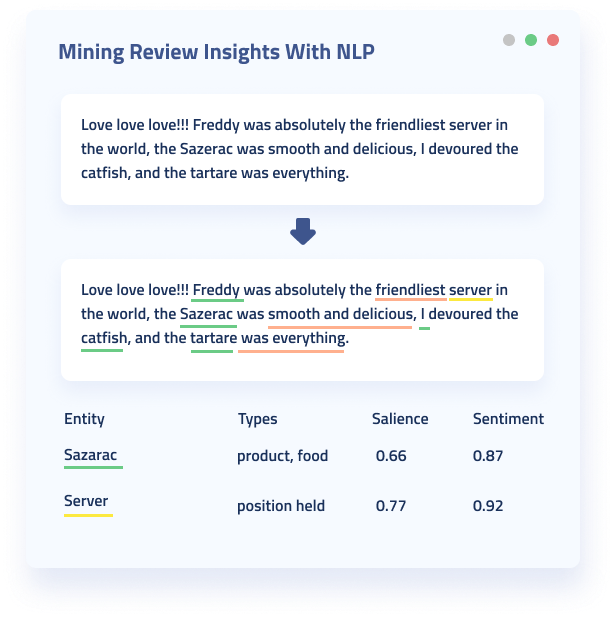

Then we passed reviews to our Natural Language API, a best-in-class NLP tool that is great for identifying entities within unstructured text as well as giving them a sentiment and salience score.

You can see this process visually if you head to our NL API demo page. Paste a review in the pane in the upper left corner and entities, salience, and sentiment should emerge (more on this later).

While you could build out the results programmatically, for the sake of an initial test of our NL API on restaurant review data we did most of this process manually. You can follow along with a restaurant of your choice.
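If you would rather script the process than paste reviews by hand, the loop might look roughly like the sketch below. The `analyze` callable is a stand-in for whatever client you write around the NL API. Its name, and the `name`, `sentiment`, and `salience` keys it returns, are assumptions made for illustration, not the API’s documented response format.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical shape for one extracted entity; the real API response may differ.
Entity = Dict[str, object]


def collect_entities(
    reviews: Iterable[str],
    analyze: Callable[[str], List[Entity]],
) -> List[Entity]:
    """Run every review through `analyze` and tag each entity with its review index."""
    rows: List[Entity] = []
    for review_id, text in enumerate(reviews):
        for entity in analyze(text):
            rows.append({**entity, "review_id": review_id})
    return rows


def fake_analyze(text: str) -> List[Entity]:
    """Toy stand-in so the sketch runs offline: it only recognizes the word 'gumbo'."""
    if "gumbo" in text.lower():
        return [{"name": "gumbo", "sentiment": 0.9, "salience": 0.7}]
    return []


rows = collect_entities(["The gumbo was amazing.", "Parking was hard."], fake_analyze)
print(rows)
```

Keeping the review index on each row makes it possible to trace any aggregate number back to the review it came from.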

The Results

First up, we were able to pull out the highest and lowest sentiment towards food items on the menu. Sentiment scores range from -1 (very negative) to 1 (very positive).
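Ranking entities this way is straightforward once each entity mention carries a sentiment score. Here is a minimal sketch that averages sentiment per entity and sorts the result. The scores are made up for illustration and are not the actual Virtue data, and the (name, sentiment) pair format is an assumption.

```python
from collections import defaultdict
from statistics import mean

# Made-up (entity name, sentiment) pairs standing in for per-review NL output.
observations = [
    ("gumbo", 0.9), ("gumbo", 0.8),
    ("glaze", -0.3),
    ("sweet potato pie", 0.95), ("sweet potato pie", 0.85),
]


def average_sentiment(pairs):
    """Group sentiment scores by entity name and average each group."""
    grouped = defaultdict(list)
    for name, score in pairs:
        grouped[name].append(score)
    return {name: mean(scores) for name, scores in grouped.items()}


averages = average_sentiment(observations)
# Sort from most positive to most negative average sentiment.
ranked = sorted(averages.items(), key=lambda item: item[1], reverse=True)
print("highest:", ranked[0][0])
print("lowest:", ranked[-1][0])
```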

Interestingly, only one food element, “glaze,” had a negative average sentiment (this is a Michelin-reviewed restaurant, after all). Looking at Virtue’s menu, a “brown sugar glaze” is part of their salmon. As this is an outlier, however, it makes sense to gather a bit of context.

All entities pulled out by the NL API provide data on where they were found in the original text. In this case, it’s a reviewer using a word that doesn’t show up in the menu to describe another item. To quote, “for dessert, the lemon glazed pound cake was a little dry, next time I will try the chocolate cake.” The ability to draw high-level insights while preserving data provenance is a key advantage of this natural language product.
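To illustrate the provenance idea, here is a small sketch that recovers the sentence surrounding a mention from its character offsets. It assumes each mention comes with begin and end offsets into the review text. In this toy example we compute the offsets ourselves with `str.find`, and the sentence splitting is deliberately naive.

```python
review = (
    "We loved the gumbo. For dessert, the lemon glazed pound cake was a "
    "little dry, next time I will try the chocolate cake. Service was great."
)


def sentence_around(text: str, begin: int, end: int) -> str:
    """Return the sentence containing the character span [begin, end)."""
    # Walk back to the previous sentence boundary (or the start of the text).
    start = text.rfind(". ", 0, begin)
    start = 0 if start == -1 else start + 2
    # Walk forward to the next sentence boundary (or the end of the text).
    stop = text.find(". ", end)
    stop = len(text) if stop == -1 else stop + 1
    return text[start:stop]


# Hypothetical mention offsets for the word behind the "glaze" entity.
begin = review.find("glazed")
end = begin + len("glazed")
context = sentence_around(review, begin, end)
print(context)
```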

This brings us to another feature of the NL API: salience. Salience is how central an entity is to an understanding of the text. Scores range from 0 to 1. And as this was but one small note in a long review, the entity “glaze” received a salience score of only .04. The single negative sentiment food item is basically negligible. In this case, the sentiment of the review as a whole was very positive with just one slightly sour note.
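One way to see why a low-salience negative barely moves the overall picture is to weight each entity’s sentiment by its salience. The sketch below is an illustrative calculation, not how the scores in this post were produced. Apart from the .04 salience, the numbers are made up.

```python
def weighted_sentiment(entities):
    """Average sentiment across entities, weighting each one by its salience."""
    total_weight = sum(salience for _, salience in entities)
    if total_weight == 0:
        return 0.0
    return sum(sent * sal for sent, sal in entities) / total_weight


# (sentiment, salience) pairs for the entities in one hypothetical review.
entities = [(-0.4, 0.04), (0.9, 0.8), (0.8, 0.6)]

plain = sum(sent for sent, _ in entities) / len(entities)
weighted = weighted_sentiment(entities)
print(round(plain, 3), round(weighted, 3))
```

In the unweighted average the negative entity counts as much as the two central ones. Once salience is used as the weight, its contribution nearly disappears.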

As for the highest-sentiment food items on the menu, it looks like the wisdom of crowds thinks you should order the following:

- A French 75, Moscow Mule, or a sparkling wine

- A gumbo starter

- Followed by Virtue’s Half Chicken w/sweet potatoes, Brussels sprouts, raisins, lemon pepper seasoning

- For dessert, try the sweet potato pie w/whipped cream

While nearly every dish on the menu drew positive sentiment somewhere, the few listed above also had very high salience scores. In short, they defined the individual’s experience of the restaurant.
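Picking out dishes that are both well liked and central to a review amounts to filtering on two scores at once. A minimal sketch follows. The thresholds and all of the scores are assumptions chosen for illustration.

```python
from typing import List, NamedTuple


class EntityScore(NamedTuple):
    name: str
    salience: float
    sentiment: float


def standouts(
    entities: List[EntityScore],
    min_salience: float = 0.5,
    min_sentiment: float = 0.7,
) -> List[str]:
    """Keep entities that clear both the salience and the sentiment threshold."""
    return [
        e.name
        for e in entities
        if e.salience >= min_salience and e.sentiment >= min_sentiment
    ]


# Made-up scores, not the actual Virtue results.
menu_entities = [
    EntityScore("gumbo", 0.8, 0.9),
    EntityScore("sweet potato pie", 0.7, 0.95),
    EntityScore("fries", 0.1, 0.8),
    EntityScore("glaze", 0.04, -0.3),
]

picks = standouts(menu_entities)
print(picks)
```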

Location Entity Types

As mentioned previously, hospitality settings are often judged on a range of factors. Eating out is a sensory experience, often coupled with special moments in someone’s life: a trip, or the commemoration of an event. This shows through in a range of qualitative inputs in reviews, one of which is how the location “feels.”

Our NL API picked up on a distinct cluster of location-related entities in Virtue’s reviews: either the physical location of the restaurant, or locations that the food and experience reminded patrons of. As a southern American (essentially high-end soul food) restaurant, Virtue brought up comparisons to locations throughout the American South.

| Location Entity | Salience | Sentiment |
| --- | --- | --- |
| Southern United States | .36 | .89 |
| Chiapas | .73 | .95 |
| New Orleans | .64 | .92 |
| Mississippi | .9 | .9 |
| Lower Alabama | .91 | .9 |
| Tennessee | .83 | .9 |

Clearly the restaurant does a solid job of expressing a connection to the locations from which their food style originates.

Additionally, location markers can be a bit closer to home. Hyde Park, the neighborhood in which Virtue is located, held high sentiment, as did mentions of the restaurant itself. When we dug into the actual text, many reviews noted a nice ambiance and joy that the restaurant had moved into the neighborhood. These signals looked like the following:

| Location Entity | Salience | Sentiment |
| --- | --- | --- |
| Hyde Park | .48 | .75 |
| Restaurant | .64 | .39 |
| Bar | .05 | .13 |
| Chicago | .77 | .44 |

Pricing And Service Entity Types

Finally, logistical matters tend to surface in dining reviews (especially if a restaurant does particularly well or poorly). Among our NL API results, entities clustered around a few such issues.

First off, Virtue is a nice $$-$$$ restaurant. It also sells southern or soul food, which in other locations may not be a particularly expensive meal. This led to a small cluster of reviews focused on price. Digging in a bit more, we could see that these reviews often came from individuals who expected the price to be lower until they arrived at the restaurant.

| Monetary Figure Entities | Salience | Sentiment |
| --- | --- | --- |
| All entities containing dollar signs | 0.01 | .06 |

With this said, the vast majority of these entities received salience close to 0. Reviewers may have noted that they were surprised by the price, but they had a high-sentiment time anyway! Overall, sentiment around monetary figures was relatively neutral, potentially suggesting Virtue is actually well priced.

Additionally, as with all restaurants, there will be a range in how patrons perceive service. Aside from a few minor incidents, reviews of service at Virtue are very positive, and service seems to be fairly central to how individuals felt about their time in the restaurant (judging by the high salience values).

| Service or Staff Entities | Salience | Sentiment |
| --- | --- | --- |
| Erick Williams (Owner and Chef) | .34 | .7 |
| Owner | .01 | .37 |
| Server | .07 | .58 |
| Bartender | .03 | .98 |
| “Dave” | .41 | .59 |
| “Bridgette” | .52 | .75 |
| “Fernando” | .82 | .99 |
| “Freddy” | .33 | .55 |
| “Jesus” | .65 | .8 |
| Manager | .02 | -.05 |

We should note that Diffbot does not have any affiliation with Virtue, and that this exercise is simply for illustration purposes. Besides owner and chef Erick Williams, whose name we were able to validate online, these additional names are presumably those of staff at the restaurant, though they could simply be individuals a reviewer was dining with.

Takeaways

We’ve worked through ways in which entities, sentiment, and salience can provide insight from unstructured review data at scale. Of note is the rich range of entities that individuals tend to reference in reviews. Additionally, the ability to preserve the context from which entities and facts are pulled allows for a better understanding of what outlier data may mean. Take, for example, one of the few entities at Virtue with negative sentiment: “manager.” When we looked for context around this -.05 sentiment, we saw the typical pattern of escalating an issue to a manager when something doesn’t go right in a hospitality setting. For context, this is based on two mentions of a manager from among over 3,000 total entities, and its salience of .02 is practically negligible.

Interested in seeing what AI can pull out of your reviews? Check out our web data extraction API test drive and our Natural Language API demo page to try this process out for yourself.

Looking for a more custom solution? Reach out to our data solutions team for a custom demo.
