Skip Ahead

- Sentiment Analysis

- Text Classification

- Chatbots & Virtual Assistants

- Text Extraction (Mining)

- Machine Translation

- Text Summarization

- Market Intelligence

- Intent Classification

- Urgency Detection

- Speech Recognition

- Search Autocorrect and Autocomplete

- Social Media Monitoring

- Web Data Extraction

- Machine Learning

- Threat Detection

- Fraud Detection

- Native Advertising

The Library of Alexandria was the pinnacle of the ancient world’s recorded knowledge. It’s estimated that it contained the scroll equivalent of 100,000 books. This was the culmination of thousands of years of knowledge that made it into the records of the time. Today, the Library of Congress holds much the same distinction, with over 170M items in its collection.

While impressive, those 170M items digitized could fit onto a shelf in your basement. Roughly 10 12 terabyte hard drives could contain the entirety.

For comparison, the average data center of today (there are 7.2M of them at last count) takes up an average of 100,000 square feet. Nearly every foot filled with storage.

With this much data, there’s no army of librarians in the whole world who could organize them…

Natural language processing refers to technologies and techniques that take unorganized data and provide meaning and structure at scale. Imagine taking a stack of documents on your desk, making them searchable, sortable, prioritizing them, or generating summaries for each. These are the sort of tasks natural language processing supports in business and research settings.

At Diffbot, we see a wide range of use cases using our benchmark-topping Natural Language API. We’ll work through some of these use cases as well as others supported by other technologies below.

Sentiment Analysis

These days, it seems as if nearly everyone online has an opinion (and is willing to share it widely). The velocity of social media, support ticket, and review data is astounding, and many teams have sought solutions to automate the understanding of these exchanges.

Sentiment analysis is one of the most widespread uses of natural language processing. This process involves determining how “positive” or “negative” a given text is. Common uses for sentiment analysis are wide ranging and include:

- Buyer risk

- Supplier risk

- Market intelligence

- Product intelligence (reviews)

- Social media monitoring

- Underwriting

- Support ticket routing

- Investment intelligence

While no natural language processing task is foolproof, studies show that analysts tend to agree with top-tier sentiment analysis services close to 85% of the time.

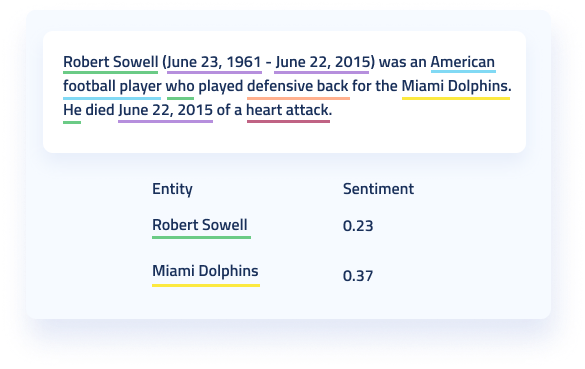

One categorical difference between sentiment analysis providers is that some provide a sentiment score for entire documents, while some providers can give you the sentiment of individual entities within the text. A second important factor about entity-level sentiment involves knowing how central an entity is to understanding the text. This measure is commonly called the “salience” of an entity.

Text Classification

Text classification can refer to a process internal to natural language processing tools in which text is grouped into related words and prepared for further analysis. Additionally, text (topic) classification can refer to the user output of greater business use.

The uses of text (topic) classification include ticket or call routing, news mention tracking, and providing contextuality to other natural language processing outputs. Text classification can function as an “operator” of sorts, routing requests to the person best suited to solve the issue.

Studies have shown that the average support worker can only handle around 20 support tickets a day. Text classification can dramatically increase the time before tickets reach the right support team member as well as provide this team member with context to solve an issue quickly. Salesforce has noted that 69% of high-performing support teams are considering the use of AI for ticket routing.

Additionally, you can think of text classification as one “building block” for understanding what is going on in bulk unstructured text. Text classification processes may also trigger additional natural language processing through identifying languages or topics that should be analyzed in a particular way.

Chatbots & Virtual Assistants

Loved by some, despised by others, chatbots form a viable way to direct informational conversations towards self service or human team members.

While historical chatbots have relied on makers plotting out ‘decision trees’ (e.g. a flow chart pattern where a specific input yields a specific choice), natural language processing allows chatbot users several distinct benefits:

- The ability to input a nuanced request

- The ability to type a request in informal writing

- More intelligence judgment on when to hand off a call to an agent

As the quality of chatbot interactions has improved with advances in natural language processing, consumers have grown accustomed to dealing with them. The number of consumers willing to deal with chatbots doubled between 2018 and 2019. And more recently it has been reported that close to 70% of consumers prefer to deal with chatbots for answers to simple inquiries.

Text Extraction (Mining)

Text extraction is a crucial functionality in many natural language processing applications. This functionality involves pulling out key pieces of information from unstructured text. Key pieces of information could be entities (e.g. companies, people, email addresses, products), relationships, specifications, references to laws or any other mention of interest. A second function of text extraction can be to clean and standardize data. The same entity can be referenced in many different ways within a text, as pronouns, in shorthand, as grammatically possessive, and so forth.

Text extraction is often a “building block” for many other more advanced natural language processing tasks.

Text extraction plays a critical role in Diffbot’s AI-enabled web scraping products, allowing us to determine which pieces of information are most important on a wide variety of pages without human input as well as pull relevant facts into the world’s largest Knowledge Graph.

Machine Translation

Few organizations of size don’t interface with global suppliers, customers, regulators, or the public at large. “Human in the loop” global news tracking is often costly and reliant on recruiting individuals who can read all of the languages that could provide actionable intelligence for your organization.

Machine translation allows these processes to occur at scale, and refers to the natural language processing task of converting natural text in one language to another. This relies on understanding the context, being able to determine entities and relationships, as well as understanding the overall sentiment of a document.

While some natural language processing products center their offerings around machine translation, others simply standardize their output to a single language. Diffbot’s Natural Language API can take input in English, Chinese, French, German, Spanish, Russian, Japanese, Dutch, Polish, Norwegian, Danish or Swedish and standardize output into English.

Text Summarization

Text summarization is one of a handful of “generative” natural language processing tasks. Reliant on text extraction, classification, and sentiment analysis, text summarization takes a set of input text and summarizes it. Perhaps the most commonly utilized example of text summarization occurs when search results highlight a particular sentence within a document to answer a query.

Two main approaches are used for text summarizing natural language processing. The extraction approach finds a sentence(s) within a text that it believes coherently summarizes the main points of the document. The abstraction approach actually rewrites the input text, removing points it believes are less important and rephrasing to reduce length.

The primary benefit of text summarization is the preserving of time for end users. In cases like question answering in support or search, consumers utilize text summarization daily. Technical, medical, and legal settings also utilize text summarization to give a quick high-level view of the main points of a document.

Market Intelligence

The range of data sources on consumers, suppliers, distributors, and competitors makes market intelligence incredibly ripe for disruption via natural language processing. Web data is a primary source for a wide range of inputs on market conditions, and the ability to provide meaning while absolving individuals from the need to read all underlying documents is a game changer.

Applied with web crawling, natural language processing can provide information on key market happenings such as mergers and acquisitions, key hires, funding rounds, new office openings, and changes in headcount. Other common market intelligence uses include sentiment analysis of reviews, summarization of financial, legal, or regulatory documents, among other uses.

Intent Classification

Intent classification is one of the most revenue-centered and actionable applications of natural language processing. In intent classification the input is direct communications from a prospect or customer. Using machine learning, intent classification tools can rate how “ready to buy” a given individual is during an interaction. This can prompt sales and marketing outreach, special offers, cross-selling, up-selling, and help with lead scoring.

Additionally, intent classification can help to route inquiries aimed at support or general queries like those related to billing. The ability to infer intentions and needs without even needing to prompt discussion members to answer specific questions enables for a faster and more frictionless experience for service providers and customers.

Urgency Detection

Urgency detection is related to intent classification, but with less focus on where a text indicates a writer is within a buying process. Urgency detection has been successfully used in cases such as law enforcement, humanitarian crises, and health care hotlines to “flag up” text that indicates a certain urgency threshold.

Because urgency detection is just one method — among others — in which communications can be routed or filtered, low or no supervision machine learning can often be used to prepare these functions. In instances in which an organization does not have the resources to field all requests, urgency detection can help them to prioritize the most urgent.

Speech Recognition

In today’s world of smart homes and mobile connectivity, speech recognition opens up the door to natural language processing away from written text. By focusing on high fidelity speech-to-text functionality, the range of documents that can be fed to natural language processing programs expands dramatically.

In 2020, an estimated 30% of all searches held a voice component. Applying natural language processing detailed in the other points in this guide is a huge opportunity for organizations providing speech-related capabilities.

Search Autocorrect and Autocomplete

Search autocorrect and complete may be the area most individuals deal with natural language processing most readily. In recent years, search on many ecommerce and knowledge base sites has been entirely rethought. The ability to quickly identify intent and pair it with an appropriate response can lead to better user experience, higher conversion rates, and more end data about what users want.

While 96% of major ecommerce sites employ autocorrect and/or autocomplete, major benchmarks find that close to 30% of these sites have severe usability issues. For some of the largest traffic volume sites on the web, this is a major opportunity to employ quality predictive search using cutting-edge natural language processing.

Social Media Monitoring

Of all media sources online, social can be the most overwhelming in velocity, range of tone and conversation type. Global organizations may need to field or monitor requests in many languages, on many platforms. Additionally, social media can provide useful inputs into external issues that may affect your organization, from geopolitical strife, to changing consumer opinion, to competitor intelligence.

On the customer service and sales fronts, 79% of consumers expect brands to respond within a day on social media requests. Recent studies have shown that across industries only 29% of brands regularly hit this mark. Additionally, the cost of finding new customers is 7x that of keeping existing customers, leading to increased need for intent monitoring and natural language processing of social media requests.

Web Data Extraction

Rule-based web data extraction simply doesn’t scale past a certain point. Unless you know the structure of a web page in advance (many of which are changing constantly), rules specified for which information is relevant to extract will break. This is where natural language processing comes into play.

Organizations like Diffbot apply natural language processing for web data extraction. By training natural language processing models around what information is likely useful by page type (e.g. product page, profile page, article page, discussion page, etc.), we can extract web data without pre-specified rules. This leads to resiliency in web crawling as well as enables us to expand the number of pages we can extract data from. This ability to crawl across many page types and continuously extract facts is what powers our Knowledge Graph. Interested in web data extraction? Be sure to check out our automatic extraction APIs or pre-extracted firmographic, demographic, and article data within our Knowledge Graph.

Machine Learning

While machine learning is often an input to natural language processing tools, the output of natural language processing tools can also jumpstart machine learning projects. Using automatically structured data from the web can help you skip time-consuming and expensive annotation tasks.

We routinely see our Natural Language API as well as Knowledge Graph data — both enabled with natural language processing technology — utilized to jump start machine learning exercises. There are few training data sets as large as public web data. And the range of public web data types and topics makes it a great starting point for many, many machine learning journeys.

Threat Detection

For platforms or other text data sources with high velocity, natural language processing has proven to be a good first line of defense for flagging hate speech, threatening speech, or false claims. The ability to monitor social networks and other locations at scale allows for the identification of networks of “bad actors” and a systemic protection from malicious actors online.

We’ve partnered with multiple organizations to help combat fake news with our natural language processing API, site crawlers, and Knowledge Graph data. Whether as a source for live structured web data or as training data for future threat detection tools, the web is the largest source of written harmful or threatening communications. This makes it the best location for training effective natural language processing tools used by non-profits, governmental bodies, media sites looking to police their own content, and other uses.

Fraud Detection

Natural language processing plays multiple roles in fraud prevention efforts. The ability to structure product pages is utilized by large ecommerce sites to seek out duplicate and fraudulent product offerings. Secondly, structured data on organizations and key members of these organizations can help to detect patterns in illicit activity.

Knowledge graphs — one possible output of natural language processing — are particularly well suited for fraud detection because of their ability to link distinct data types. Just as human research-enabled fraud investigations “piece together” information from varying sources and on various entities, Knowledge Graphs allow for machine accumulation of similar information.

Native Advertising

For advertising embedded in other content, tracking what context provides the best setting for ad placement allows for systems to generate better and better ad placement. Using web scraping paired with natural language processing, information like the sentiment of articles, mentions of key entities as well as which entities are most central to the text can lead to better ad placement.

Many brands suffer from underperforming advertising spending as well as brand safety (placement in suitable locations), problems that natural language processing helps to aid at scale.

You must be logged in to post a comment.